Research

Technology-Based Assessment

In recent decades, the digitalization of educational content, the integration of computers in different educational settings, and the opportunity to connect knowledge and people via the Internet have led to fundamental changes in the way we gather, process, and evaluate information. In addition, more and more tablet PCs and notebooks are used in schools, and in comparison to traditional sources of information such as textbooks, the Internet seems to be more appealing, versatile, and accessible. Initially, technology-based assessment was concerned with the comparability of test scores across test media, that is, with transferring existing measurement instruments to digital devices. Nowadays, researchers are more interested in enriching the assessment with interactive tasks and video material or in making testing more efficient by using digital behavior traces.

My research in this area follows this dichotomy between the comparability of test data gathered on different devices and the enrichment of the assessment. For example, colleagues and I try to answer fundamental questions about declarative knowledge with a smartphone app: How many dimensions of knowledge have to be distinguished (e.g., humanities vs. natural sciences)? Does knowledge differentiate with age? Is it possible to predict cheating behavior based on auxiliary data such as reaction times and page defocusing events?

Selection of recent publications

- Schroeders, U., Achaa-Amankwaa, P., Walter, J., Endlich, D., Hasselhorn, M., Golle, J., & Goecke, B. (2025, June 10). PINGUIN – Assessing Elementary Students’ Initial Competencies. PsyArXiv. (preprint, syntax / data)
- Achaa-Amankwaa, P., Walter, J., & Schroeders, U. (2025, January 9). Wordle - A Game-Based Assessment of Verbal Ability? PsyArXiv. (preprint, reg. report)
- Schroeders, U., Schmidt, C., & Gnambs, T. (2022). Detecting careless responding in survey data using stochastic gradient boosting. Educational and Psychological Measurement, 82(1), 29–56. https://doi.org/10.1177/00131644211004708 (open access)
- Steger, D., Schroeders, U., & Wilhelm, O. (2021). Caught in the act: Predicting cheating in unproctored knowledge assessment. Assessment, 28(3), 1004–1017. https://doi.org/10.1177/1073191120914970 (open access, syntax / data)

Intelligence Research

Our understanding of intelligence has been — and still is — significantly influenced by the development and application of new testing procedures as well as novel computational and statistical methods. In science, methodological developments typically follow new theoretical ideas. In intelligence research, however, great breakthroughs often followed the reverse order. For instance, the once-novel factor analytic tools preceded and facilitated new theoretical ideas such as the theory of multiple group factors of intelligence. Therefore, the way we assess and analyze intelligent behavior also shapes the way we think about intelligence.

I’m mainly interested in an adequate measurement of crystallized intelligence (gc) or, more precisely, declarative knowledge. Despite its enormous importance and ubiquitous nature, gc is often neglected and has aptly been labeled the “dark matter of intelligence”. This is most likely due to the difficulties of its measurement, because, as Cattell (1971, p. 143) put it, “crystallized ability begins after school to extend into Protean forms and that no single investment such as playing bridge or skill in dentistry can be used as a manifestation by which to test all people”. Colleagues and I try to answer fundamental questions about declarative knowledge using modern technology. In a smartphone-based study with more than 4,000 knowledge items, we explore how many dimensions of knowledge have to be distinguished (e.g., humanities vs. natural sciences) and whether knowledge differentiates with age.

Selection of recent publications

- Schroeders, U. (2025, September 6). Crystallized Intelligence: Exploring the Dark Matter of Intelligence. PsyArXiv. (preprint)
- Watrin, L., Schroeders, U., & Wilhelm, O. (2023). Gc at its boundaries: A cross-national investigation of declarative knowledge. Learning and Individual Differences, 102, Article 102267. https://doi.org/10.1016/j.lindif.2023.102267 (preprint, syntax / data)
- Watrin, L., Schroeders, U., & Wilhelm, O. (2021). Structural invariance of declarative knowledge across the adult lifespan. Psychology and Aging, 37(3), 283–297. https://doi.org/10.1037/pag0000660 (preprint, syntax / data)
- Schroeders, U., Watrin, L., & Wilhelm, O. (2021). Age-related nuances in knowledge assessment. Intelligence. Advance online publication. https://doi.org/10.1016/j.intell.2021.101526 (preprint, blog post 1, blog post 2, blog post 3, syntax / data)

Machine Learning and Metaheuristics

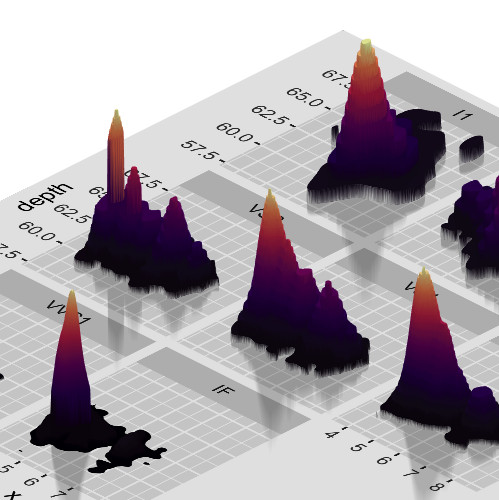

Machine learning algorithms have positively influenced research in various scientific disciplines such as astrophysics, genetics, or medicine. Psychology is also trying to exploit the new possibilities of machine learning, although psychologists often deal with comparatively small samples and fuzzy indicators. Thus, there is a thin line between serious research and hype. In this research area, I’m concerned with two things: (a) evaluating the potential of machine learning algorithms to predict real-world outcomes, and (b) using metaheuristics to optimize psychological measures.

To give an impression of the latter line of research: Ant Colony Optimization, which mimics the foraging behavior of ants, is a popular optimization algorithm in the computational sciences. Due to its great flexibility, it can also be applied to psychological settings, for example, to the construction of short scales. The advent of large-scale assessment and the more frequent use of longitudinal measurement designs have led to an increased demand for psychometrically sound short scales. Traditionally, such scales are often constructed by simply removing the items with the lowest item-total correlation from the item pool of the long version. We compared the quality and efficiency of such traditional strategies for constructing short scales with those of metaheuristics and demonstrated that metaheuristics outperform traditional item selection.
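
The general idea of pheromone-guided item selection can be illustrated with a heavily simplified sketch. It is not the implementation used in the publications listed below: the data are simulated, Cronbach's alpha is used as the only optimization criterion purely for illustration, and all function names (`simulate`, `aco_select`, `weighted_sample`) and parameter values (number of ants, evaporation rate) are hypothetical.

```python
import random
from statistics import pvariance


def cronbach_alpha(data, items):
    """Cronbach's alpha for the chosen item columns of a persons x items table."""
    k = len(items)
    item_vars = [pvariance([row[i] for row in data]) for i in items]
    total_var = pvariance([sum(row[i] for i in items) for row in data])
    if total_var == 0:
        return 0.0
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def weighted_sample(weights, k, rng):
    """Draw k distinct item indices with probability proportional to their weights."""
    pool = list(range(len(weights)))
    chosen = []
    for _ in range(k):
        pick = rng.choices(pool, weights=[weights[i] for i in pool])[0]
        pool.remove(pick)
        chosen.append(pick)
    return sorted(chosen)


def aco_select(data, k, n_ants=20, n_iter=50, evaporation=0.9, seed=1):
    """Simplified ACO-style search for a k-item short scale (illustrative only)."""
    rng = random.Random(seed)
    pheromone = [1.0] * len(data[0])  # every item starts equally attractive
    best_items, best_fit = None, float("-inf")
    for _ in range(n_iter):
        for _ in range(n_ants):
            items = weighted_sample(pheromone, k, rng)  # one ant builds one short scale
            fit = cronbach_alpha(data, items)
            if fit > best_fit:
                best_items, best_fit = items, fit
        # Evaporate all pheromone, then reinforce the items of the best scale so far.
        pheromone = [p * evaporation for p in pheromone]
        for i in best_items:
            pheromone[i] += max(best_fit, 0.0)
    return best_items, best_fit


def simulate(n_persons=300, seed=0):
    """Hypothetical item pool: items 0-5 measure a common trait, items 6-11 are noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_persons):
        trait = rng.gauss(0, 1)
        good = [trait + rng.gauss(0, 1) for _ in range(6)]
        noise = [rng.gauss(0, 1) for _ in range(6)]
        data.append(good + noise)
    return data


data = simulate()
short_items, alpha = aco_select(data, k=4)
print("selected items:", short_items, "alpha:", round(alpha, 3))
```

In applied work the criterion would typically combine several aspects of scale quality rather than reliability alone; swapping out `cronbach_alpha` for another function is the only change the sketch would need.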

Selection of recent publications

- Speck, K.-L., Jankowsky, K., Scharf, F., & Schroeders, U. (2025, November 29). Beyond the hype: A simulation study evaluating the predictive performance of machine learning models in psychology. PsyArXiv. (preprint, syntax)
- Schroeders, U., Mariss, A., Sauter, J., & Jankowsky, K. (2025). Predicting juvenile delinquency and criminal behavior in adulthood using machine learning. International Journal of Behavioral Development. Advance online publication. https://doi.org/10.1177/01650254251339392 (preprint, reg. report, syntax)
- Zimny, L., Schroeders, U., & Wilhelm, O. (2024). Ant Colony Optimization for parallel test assembly. Behavior Research Methods, 56, 5834–5848. https://doi.org/10.3758/s13428-023-02319-7 (open access, syntax / data)
- Schroeders, U., Scharf, F., & Olaru, G. (2023). Model specification searches in structural equation modeling using Bee Swarm Optimization. Educational and Psychological Measurement. Advance online publication. https://doi.org/10.1177/00131644231160552 (open access, blog post, syntax / data, BSO algo)

Structural Equation Modeling Techniques

Structural Equation Modeling (SEM) is a versatile and powerful statistical tool in the social and behavioral sciences. It is useful for creating and refining psychological measures (i.e., establishing measurement models), but also for evaluating complex theoretical models against empirical data (i.e., testing structural models). My research on SEM evolves along three lines: (a) measurement invariance (MI) testing (MI with categorical indicators, longitudinal MI), (b) Meta-Analytic Structural Equation Modeling (MASEM), and (c) Local Structural Equation Modeling (LSEM).

Measurement invariance is an important concept in test construction and an essential prerequisite for valid and fair comparisons across cultures, administration modes, language versions, or sociodemographic groups. MI is often tested in a straightforward procedure that compares measurement models with increasingly restrictive parameter constraints across subgroups (e.g., female vs. male). Put simply, if results can be attributed to differences in the construct in question rather than to membership in a certain group, researchers speak of an invariant measurement. LSEM provides a flexible extension for modeling covariance structures as a function of a continuous context variable. These models have turned out to be especially useful in describing the development of skills and abilities, since age can be handled as a continuous variable without artificial categorization into age groups.
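
The weighting idea behind LSEM can be sketched in a few lines. The example below is a minimal illustration on simulated data, not the procedure used in the publications listed below: it only computes a kernel-weighted covariance of two indicators at several focal ages, whereas a full LSEM analysis would fit a structural equation model to the complete weighted covariance matrix at each focal point. The function names, the Gaussian kernel, the bandwidth of 5 years, and the simulated age trend are all assumptions made for illustration.

```python
import math
import random


def gaussian_kernel_weights(moderator, focal_point, bandwidth):
    """Weight each person by how close their moderator value is to the focal point."""
    return [math.exp(-0.5 * ((m - focal_point) / bandwidth) ** 2) for m in moderator]


def weighted_covariance(x, y, weights):
    """Covariance of x and y in which each observation counts according to its weight."""
    total = sum(weights)
    mean_x = sum(w * xi for w, xi in zip(weights, x)) / total
    mean_y = sum(w * yi for w, yi in zip(weights, y)) / total
    return sum(w * (xi - mean_x) * (yi - mean_y)
               for w, xi, yi in zip(weights, x, y)) / total


# Simulated data (hypothetical): the loading of indicator y on the trait grows with age.
rng = random.Random(42)
age, x, y = [], [], []
for _ in range(2000):
    a = rng.uniform(20, 70)
    trait = rng.gauss(0, 1)
    loading_y = 0.2 + 0.016 * (a - 20)  # 0.2 at age 20, rising to 1.0 at age 70
    age.append(a)
    x.append(trait + rng.gauss(0, 1))
    y.append(loading_y * trait + rng.gauss(0, 1))

bandwidth = 5.0  # arbitrary smoothing parameter, in years
local_cov = {}
for focal_age in range(25, 70, 10):  # focal points 25, 35, 45, 55, 65
    w = gaussian_kernel_weights(age, focal_age, bandwidth)
    local_cov[focal_age] = weighted_covariance(x, y, w)
    print(focal_age, round(local_cov[focal_age], 2))
```

Because every person contributes to every focal point, only with a different weight, age never has to be cut into discrete groups; the bandwidth controls how smooth the resulting age trend is.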

Selection of recent publications

- Schroeders, U., Morgenstern, M., Jankowsky, K., & Gnambs, T. (2024). Short-scale construction using meta-analytic Ant Colony Optimization: A demonstration with the Need for Cognition Scale. European Journal of Psychological Assessment, 40(5), 376–395. https://doi.org/10.1027/1015-5759/a000818 (open access, syntax / data)
- Gnambs, T., & Schroeders, U. (2024). Reliability and factorial validity of the Core Self-Evaluations Scale: A meta-analytic investigation of wording effects. European Journal of Psychological Assessment, 40(5), 343–359. https://doi.org/10.1027/1015-5759/a000783 (open access, syntax / data)
- Olaru, G., Robitzsch, A., Hildebrandt, A., & Schroeders, U. (2022). Examining moderators of vocabulary acquisition from kindergarten throughout elementary school using Local Structural Equation Modeling. Learning and Individual Differences, 95, Article 102136. https://doi.org/10.1016/j.lindif.2022.102136 (open access, syntax)
- Schroeders, U., Kubera, F., & Gnambs, T. (2022). The structure of the Toronto Alexithymia Scale (TAS-20): A meta-analytic confirmatory factor analysis. Assessment, 29(8), 1806–1823. https://doi.org/10.1177/10731911211033894 (open access, syntax / data)